Barely ten months after ChatGPT’s launch changed the world of technology, OpenAI has unleashed ChatGPT Enterprise, which gives enterprises “Enterprise-grade security and privacy, unlimited higher-speed GPT-4 access, longer context windows for processing longer inputs, advanced data analysis capabilities, customization options, and much more.”

Assuming the 20 enterprises that road-tested ChatGPT Enterprise experienced these benefits in double-quick time, you have to take a serious look at scaling up GenAI tools or risk getting left behind on the most hyped technology since the Internet came to be…

The market is awash with BS about GenAI – and you already know it

As Kurzweil’s 2029 prophecy, that AI will pass a valid Turing Test and draw level with human intelligence, appears less improbable, it’s vital that we take a reality check and separate fantasy from fact.

Firstly, you gotta love the discussions about AI displacing jobs. It feels like the whole narrative equating automation with job losses just got reloaded with a GenAI sugar frosting.

Yet at the same time, the build-out of capabilities on the service provider side is equally mind-boggling, as everyone claims to have built deep talent and solution capabilities in barely a few months. To this end, HFS is currently engaging with the leading service providers to learn about their strategic objectives and capabilities ahead of releasing our inaugural Generative Enterprise Horizon study on the topic.

Simply put, the whole enterprise world is suffering from GenAI information overload, and we need to take a deep breath and crystallize the issues that will drive enterprise adoption:

- Take a long-term view on technology adoption: Technology and capabilities continue to evolve at an astounding rate, and their hype phases are shortening. We’ve only just come through the excitement of IoT, blockchain, RPA, cloud, etc., and adoption of these technologies is still relatively immature, with their potential only just being realized. ChatGPT was only released to the public last November 30th, and the GPT-4 series of foundation models followed in March of this year, already demonstrating a 10-fold increase in synthesis power among a host of other significant improvements. Yet enterprises are still struggling to adopt cloud! And we should remember that progress with GenAI is only possible once you fix your data infrastructure and integrate it with cloud and your other AI tools. With that in mind, take all those surveys on adoption rates with a big pinch of salt, as consultants and tech firms vie to lead the GenAI narrative. For example, many traditional NLP projects, along with many other initiatives using older AI tech, are being relabeled as GenAI to make them sound more appealing.

- It is about the enterprise, stupid! GenAI has not infiltrated the enterprise through the traditional “vendor push” of most technologies. Instead, users, especially the younger generation, are bringing it into everyone’s daily lives, for example for education. Most GenAI examples are not enterprise-centric, and only a handful of projects have reached production. We must acknowledge that enterprise environments are not smartphones. Enterprises are not closed systems; they have to deal with complexity and scale, as well as the old foe that is legacy.

- There will be big winners and losers in the AI arms race, and there is nowhere to hide. Whether you sell, advise, buy, or use technology, there is an arms race to build out foundational GenAI models, fueled by fresh dollops of crazy capital. If and when the hype bubble bursts (as hype bubbles always do), the technology will be blamed. And, of course, the typical analyst question is: who are the winners and losers? Luckily, we are not financial analysts. Our job is to guide enterprise adoption, not financial gain, but ultimately the GenAI era will produce winners and losers, and you can’t hide from that.

- Beware of the hyperscalers ringfencing their oligopoly: In our view, more power, not less, is getting concentrated with the hyperscalers through offerings such as Amazon Bedrock, Microsoft Copilot, and Google Cloud’s generative AI services. Just like cloud, GenAI will be foundational. Yet enterprises are already frustrated with the oligopoly, both in terms of vendor lock-in and spend. Only with clear objectives can enterprises justify the costs.

- Engage with the cool kids on the block like Nvidia, Databricks, and Hugging Face: Brand-new ecosystems around Nvidia, Databricks, and start-ups are emerging, and enterprises don’t yet know how to navigate them. Everybody is trying to become the new best friend of Hugging Face, which could become the new Red Hat. At the same time, Nvidia’s GPUs, the key building blocks for GenAI, have become a scarce resource, so who is actually getting hold of them?

- Your governance and explainability focus is critical. Most data privacy laws are trying to tackle black-box approaches that offer limited explanation or visibility, where something goes into a black box, something comes out, and no one understands what happened inside. Yet most machine learning is a black box with no explainability, and this largely went unquestioned until the recent explosion of focus on LLMs. To protect civil liberties and guard against bias and other issues, you need explainable AI. To this end, major legislation and regulatory frameworks are looming, for example the US Blueprint for an AI Bill of Rights and the EU AI Liability Directive. And AI litigation and regulatory action are already starting to kick in. For instance, the FTC has opened an investigation into ChatGPT maker OpenAI over the potential harm its products could cause and the company’s security practices. Lastly, responsible AI legislation around the world is not yet aligned on a common reporting format, which adds to the confusion and delays companies’ responsible AI compliance efforts.

- Get on top of enterprise data management. Anything touching customer or employee data is more scrutinized than ever, and GenAI opens up a whole new can of worms when it comes to embedding it in the enterprise. Most generative AI use cases use public data today, and getting enterprises to share private data will be challenging, if not impossible. We hear about approaches for data anonymization and for data impact assessments. But as we saw with GDPR, the courts will ultimately be the arbiters of how effective those approaches are. Equally, how can enterprises deal with model drift and eliminate the randomness from these models’ outputs, when their responses evolve as they are updated with new data? Ultimately, it is about integration into complex enterprise ecosystems.

- Scaling your GenAI is expensive – start building the business case now: Forget the free GPT-3.5 version of ChatGPT. For enterprises, GenAI is not free. On the contrary, attracting data management talent and the rare breed of prompt engineers, or even running your own foundation model, requires deep pockets. And that is before the debate about AI’s carbon footprint even gets started. In addition, access to the IT infrastructure needed to build and develop these language models is expensive, so building business cases and viable longer-term cost models will dominate sourcing discussions in the coming months.

- You must avoid a myopic view of productivity: The singular focus on productivity is misleading; remember how the obsession with replacing people with tech destroyed the RPA phenomenon. We are hearing from service providers that they intend to shrink their talent pyramids by leveraging GenAI. Yet we need to focus much more on value creation. We are hearing about fantastic breakthroughs in science, but we have yet to hear compelling examples of value creation in the enterprise.

- Understand what GenAI is… and what it isn’t: GenAI is machine learning, trained on information from disparate sources. So don’t expect a “42” thrown at you as the answer to life, the universe, and everything. And the last time we checked, it wasn’t sentient either. The next frontier for AI is becoming objective- and goal-driven, yet foundational research here is still at an early stage. It will be intriguing to watch Google’s progress with its Gemini project, which aims to add planning and problem-solving to the capabilities of LLMs.

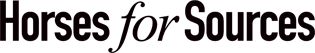

From an enterprise point of view, what all of this boils down to is integration and governance. In our exhibit, we have highlighted the critical elements. It is about building on and expanding all the hard work at the intersection of cloud, data, and AI; GenAI is not supplanting any of this. All the noise about the democratization of AI is misleading, as we still need the infrastructure and the talent to run all these models, and talent that understands GenAI does not grow on trees. Thus, it will be an arms race not only for AI capabilities but also for talent. And we should remind ourselves of the lessons of cloud adoption: cloud-native talent remains scarce, and many cloud transformations fail. It is all about learning from those experiences. Therefore, we have to learn much more about GenAI and beyond, and cutting through the market noise is an essential early step on that journey.

The Bottom Line: Enterprise adoption of GenAI is all about integration and governance; therefore, operations leaders need to take a long-term view focused on value creation

The development of GenAI is demonstrating a compression of innovation cycles we’ve never seen before. Yet all those headline-grabbing reports on enterprise adoption focus on capabilities (and sales ambitions) rather than the critical issues of integration and governance. Therefore, we urgently need to learn more about real experiences from early deployments to drive more nuanced and relevant discussions. As far as we can tell, the Singularity is not yet nigh. But stay tuned for our inaugural Horizon!
